Recent event

IMMUNE 2 INFODEMIC Project Coproduction Workshop

How can we immunise our citizens against future infodemics?

European citizens are increasingly exposed to a spreading infodemic, which can severely affect their democratic participation and engagement. It includes disinformation, misinformation, fake news and other forms of interference on a range of issues related to public life. Just as vaccination was used against the spread of the pandemic, a pre-emptive approach is needed to reduce this worsening impact. IMMUNE 2 INFODEMIC aims to immunise EU citizens and residents against disinformation and misinformation on selected themes by empowering them and equipping them with several easy-to-use tools. The project consortium formulates and co-produces three instruments ("vaccines"): digital literacy, media literacy and critical thinking. It applies these instruments to three selected hot themes ("boosters"): elections, COVID-19 and migration.

The Coproduction Workshop aimed to start the coproduction of materials (videos, infographics, presentations) to be used against dis-/misinformation in future events, workshops and e-learning settings, based on the framework defined at the initial Framework Workshop. It brought together educators, researchers, practitioners, and media and communication experts so that they could take part in the product design from the initial phase.

Three workshops were organised as part of this event over two days. They achieved good results and produced innovative ideas and inputs for the project.

- Workshop – 1 / Learning Objectives and Outcomes

- Workshop – 2 / How to immunise against infodemics

- Workshop – 3 / Potential use of AI in inoculation

The first workshop presented some of the defined objectives and learning outcomes to the audience. Using selected election-related cases, the participants were asked how to reach citizens and get them interested, and which key skills (if any) were missing and should be taken into account in the project. The outcomes of the workshop can be summarised as follows.

Key skills to focus on:

- Understanding that social media is not real life: skills to understand how marketing works and how micro-targeting is used in social media advertising.

- Supporting skills for checking facts and sources, cross-checking information and managing one’s privacy on social media.

- Knowledge of privacy problems and of how to control privacy settings.

- Knowledge of the uses and capabilities of Artificial Intelligence.

How to bring the content to the specific target groups of youth and seniors:

- Engaging content: short videos, interactive content, visibility for the project, and use of a face (real or avatar) that can engage online with the participants (a "facthacker").

- Engaging events: visiting homes or senior clubs and centres, and collaborating with local organisations and communities.

- More interactivity: inviting members of the target groups to co-create and plan the materials and cases (e.g. co-creating infographics).

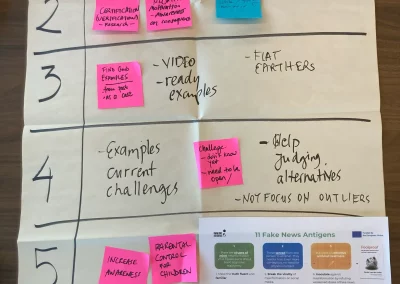

The second workshop investigated methodologies selected from the book ‘Foolproof’ by Prof. Sander van der Linden, together with the participants’ real-life experiences in countering possible mis/disinformation. Focused discussions explored how to use Foolproof’s 11 fake news antigens in the project materials:

- Make the truth fluent and familiar: simplified content; use of repetition to boost memory; use of influencers to spread accurate information; engagement to raise awareness.

- Incentivize accuracy: use of certification; use of negative motivation by raising awareness of the consequences of spreading false information.

- Identify and resist the seven traits of conspiratorial thinking: demonstrate how to identify and resist them through past cases; use short- to medium-length videos to help convey the message; use the case of Flat Earthers.

- Minimise the continued influence of misinformation: use examples based on current challenges faced by the target audience; challenge preconceived notions; help people judge the validity of information by comparing it with alternative information.

- Break the virality of misinformation on social media: increase awareness of how easily misinformation travels on social media; parental controls; neutrality throughout the creation and teaching of the materials; systematic testing to analyse the accuracy of information.

- Avoid echo chambers and filters: get to know your bubble; feed the algorithm deliberately by shaping your media consumption so that you receive recommendations from reputable sources; follow opposing views and consume media from a range of perspectives to gain a more rounded appreciation of the arguments made.

- Be aware of micro-targeting of susceptible individuals open to persuasion: understand to what extent micro-targeting is possible, how to prebunk the algorithms and how much Google knows about individuals; the emotional experience; describe cases through personal peer experience; organise meetings (or get-togethers), especially with young people, to inform and educate them about reality; inform your family, especially children.

- Inoculate against misinformation by refuting weakened doses of fake news: practice makes perfect; test-and-trial apps help a lot; the open question of how to ensure that people reach the right information and do not contribute to spreading disinformation; addressing this together with micro-targeting.

- Identify and prebunk manipulation techniques: discrediting, emotion, polarisation, impersonation, conspiracy and trolling.

- Help spread inoculation against misinformation: exploring the best ways to teach the target groups, given the goal of the project.

- Inoculate friends and family.

The third workshop was organised with AI developers and experts, focusing on the potential use of Artificial Intelligence (AI) both for spreading mis/disinformation in the near future (the next two years) and for inoculating citizens through the project materials. The first part of the workshop examined possible techniques for spreading false information using AI. The second part explored techniques for preventing or countering the two spreading techniques selected in the first round. The main aim was to identify good cases to include in the project materials as potential future scenarios. The brainstorming discussions focused on the following two cases:

- Autonomous Disinformation Generator using generative AI techniques: in the near future, such a generator could create large amounts of targeted disinformation in potentially high-risk areas. Countering it would require AI systems able to detect and combat this capacity by analysing trends and identifying which target groups and locations have been manipulated, and in which ways. AI could also be taught to score truthfulness against historical references.

- False information spreading through AI-generated deepfake influencers ("fakefluencers") and other spreaders: for prevention, AI can run reverse image searches to find the original sources of images and reveal inconsistencies, helping to detect fake images. AI can also be trained with detection tools and taught to display all perspectives on a storyline.

Schedule

30 May 2023

Press Club, Rue Froissart 95,

1040 Brussels, Belgium

Coffee and Registration

09:30 - 10:00

Opening and Keynote speech

Silvia López Arnao, Legal and Policy Officer at European Commission / DG JUST

Ivan Botoucharov, Political Adviser at European Parliament

10:00 - 10:15

Immune 2 Infodemic project introduction

Beyond the Horizon ISSG

10:15 - 10:20

Workshop 1 – Learning Objectives and Curriculum

FaktaBaari

Laurea University of Applied Sciences

10:20 - 11:20

Coffee Break

11:20 - 11:30

Workshop 2 – E-learning for Immunising against Infodemics

Beyond the Horizon ISSG

Dare to be Grey

11:30 - 12:30

Lunch and Networking

12:30 - 13:00

Schedule

20 June 2023

Da Vincilaan 1,

1930 Zaventem, Belgium

Light Meal + Introduction

Prof. Sander van der Linden – Foolproof (Video presentation)

12:00 - 12:15

Workshop Game – How can We Utilise AI and Algorithms in Different Scenarios?

● 30’ 1st Round: Investigating Techniques to Spread Disinformation

● 25’ 2nd Round: Exploring Ways to Prevent and Cope With Disinformation

● 5’ Closing Remarks and Discussion