- Abstract

The overall objective of this paper is to provide insight into the Multi-Space Analysis Model (Network Analysis) used in the Smart Navigator System at Hybrid Core, and to present a state-of-the-art view of the model structure for further studies. The model design illustrates and explains how the model improves accuracy by discovering hyperconnectivity and extracting relations among predefined data domains, such as economy, business, politics, security, environment, and society, and their neurons (nodes). Furthermore, the paper focuses on possible relation extraction methodologies and, in particular, offers ways to transition from rule-based/pattern-based approaches to neural-based methods.

Key Words: Network Analysis; Decision Systems; Hybrid Intelligence; Neural Networks.

- Introduction:

The Smart Navigator System relies on multidimensional big data informatics consisting of eight specific data spaces (domains), namely economic, diplomatic, political, security, defence, business, environment, and societal. This network of data spaces includes both vast amounts of data and an extensive range of data sources. Using advanced data analytics tools with these large-scale data sources offers unique insight into what happened and why it happened. However, to gain future-oriented deep insight and the ability to conduct smart operations via descriptive analytics and simulations, it is essential to analyse this network of networks in data spaces and to recognise and categorise connectivity and relations. To this end, the dynamic ontology used in the Smart Navigator System hybridises recognition models for events, objects, and documents, and then provides the ground for hyperconnectivity detection or, as it is commonly known, relation extraction.

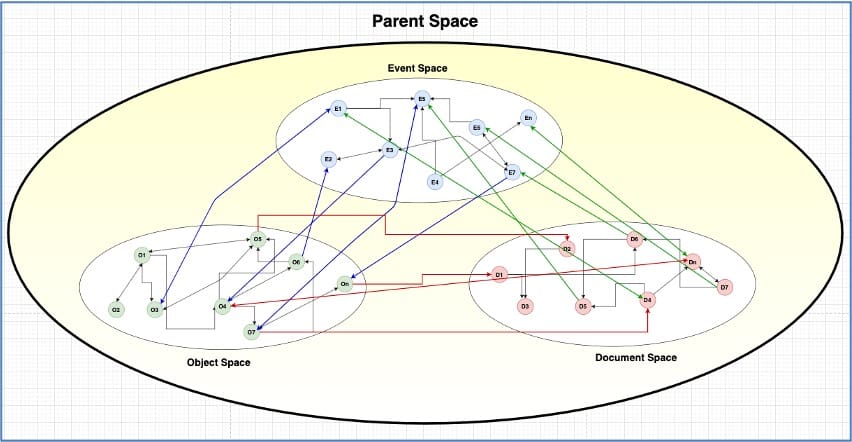

Against this backdrop, and in line with the dynamic ontology structure, each parent space has event, object, and document sub-spaces; accordingly, there are relations among the nodes within each sub-space and between sub-spaces as well. Figure 1 visualises the parent space structure and hypothetical relations in a space.

Figure 1: Parent Space Structure and Hypothetical Relations (Image by the Author)

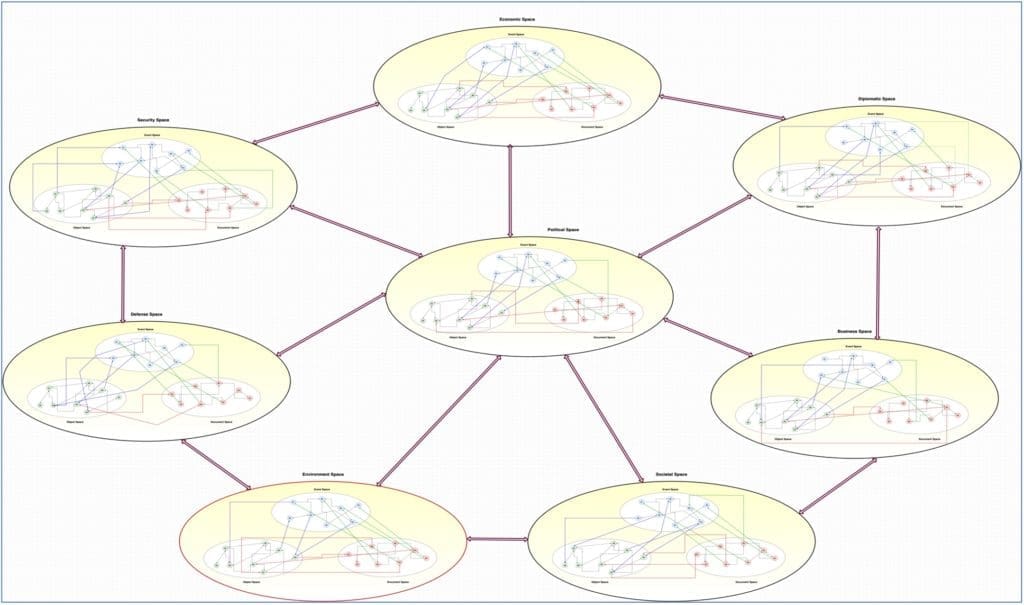

Further, Figure 2 provides a general overview of the whole Multi-Space Analysis Model (Network Analysis) Architecture.

Figure 2: Multi-Space Analysis Model Hypothetical Architecture (Image by the Author)

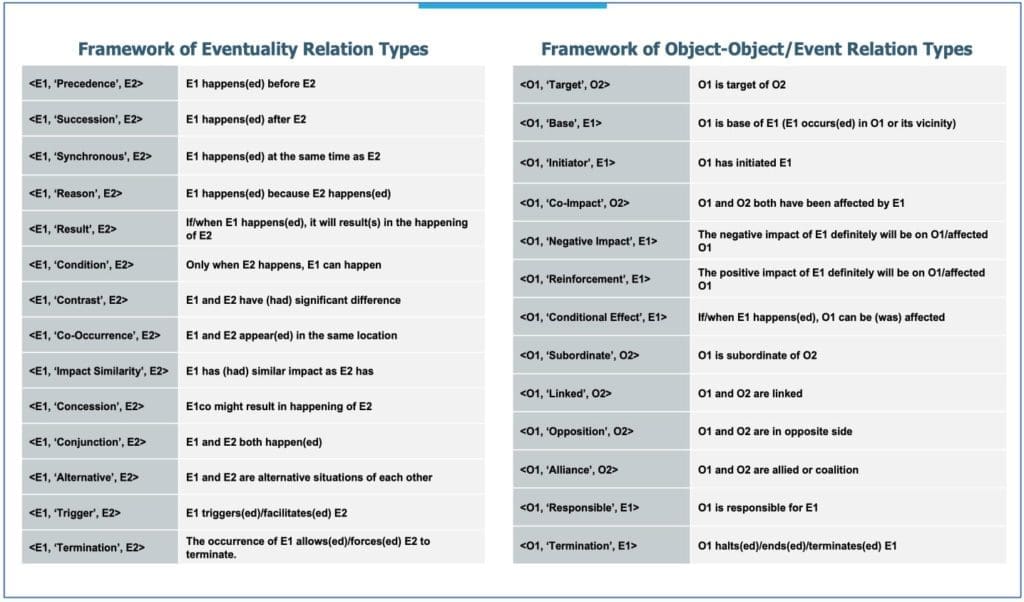

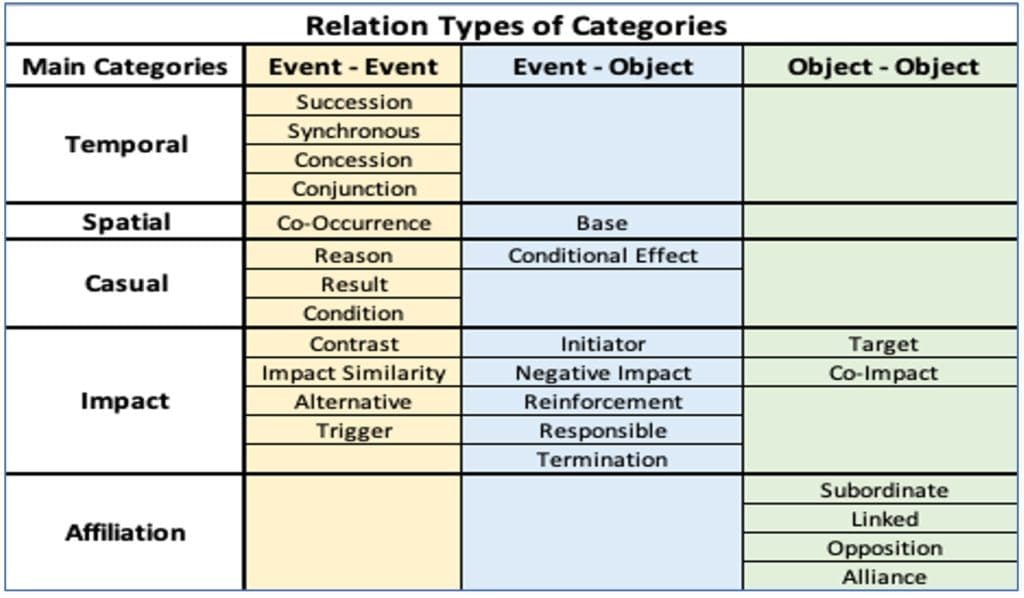

Based on an extensive study of the literature on relation types and relation extraction in network analysis, and in line with the dynamic ontology structure (primarily starting with events and objects), I have defined rule sets and then frameworks of eventuality relation types and of object-object and object-event relation types, as seen in Figures 3 and 4.

Figure 3: Frameworks of Relation Types (Image by the Author)

Figure 4: Relation Types of Categories (Image by the Author)

I have then applied these rules and the framework of relation types to designated events and objects that are stored in the Smart Navigator databases and already recognised in the dynamic ontology structure. Common-sense representation and relational reasoning are important for tackling challenging tasks in complex situations. More concretely, the development of common-sense knowledge graphs is central to converting a rule-based or pattern-based approach into a neural-based approach, because a knowledge graph, a modern and well-known form of knowledge representation, describes and preserves facts and can supply background and additional semantic information beyond the input data. Knowledge graphs have demonstrated their utility and importance in a variety of applications, including textual meaning understanding and logical reasoning.

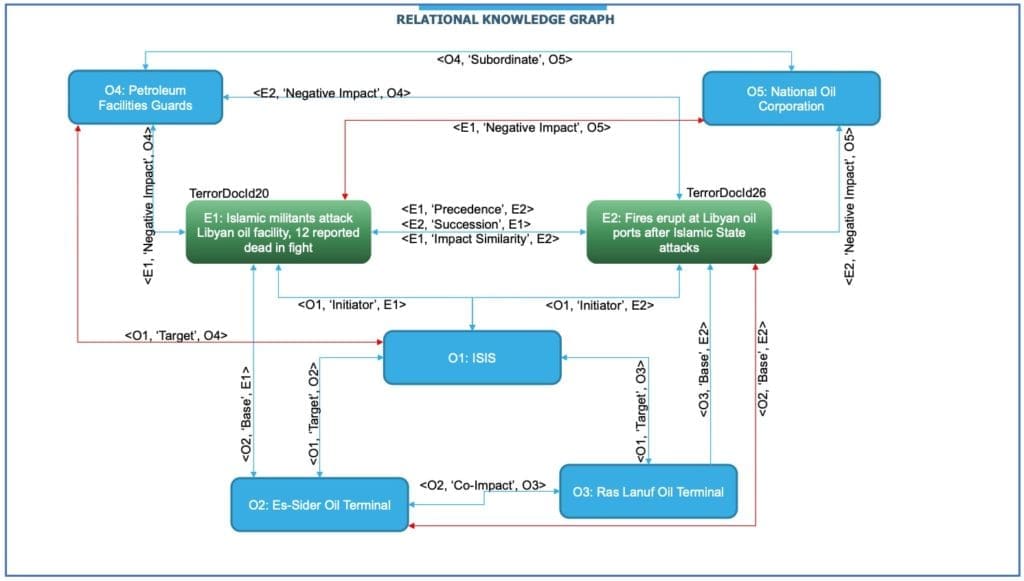

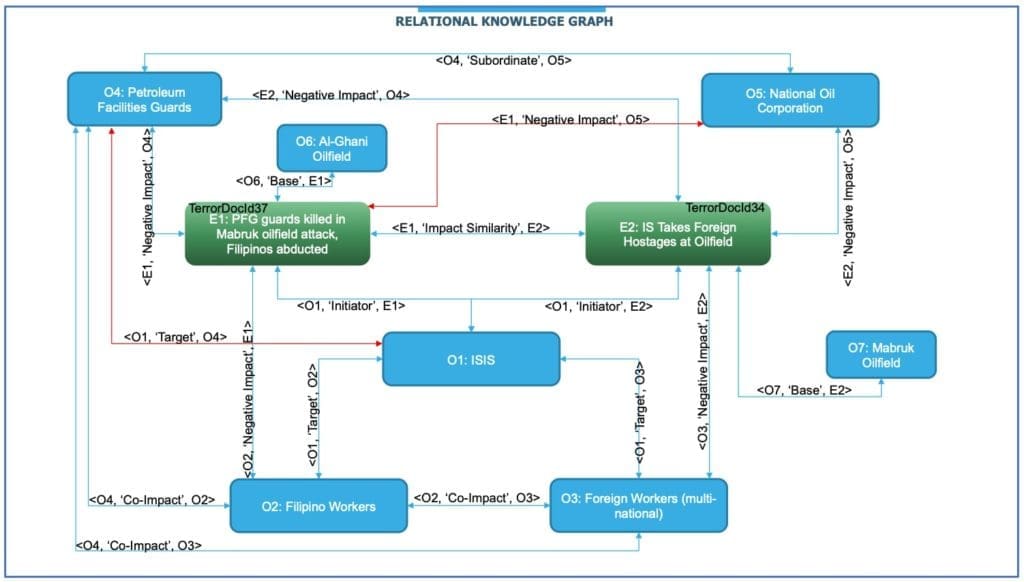

Therefore, the outcomes are visualised in the format of a knowledge graph combined with the rule-based approach. The next step would be to analyse relation extraction approaches and to define the most useful ways to convert the rule-based/pattern-based approach into a neural-based approach. Here are some examples of the relational knowledge graphs (see Figures 5-7). Blue nodes represent objects, green nodes represent events, and edges/links show relation types and directions.

Figure 5: Relational Knowledge Graph-1 (Image by the Author)

Figure 6: Relational Knowledge Graph-2 (Image by the Author)

Figure 7: Relational Knowledge Graph-3 (Image by the Author)
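To make the graph format concrete, the following is a minimal sketch of how such a relational knowledge graph could be stored as directed, typed edges. It is an illustration only: the node names, the relation labels, and the helper functions `build_graph` and `relation_category` are hypothetical and are not taken from the Smart Navigator databases or from the frameworks in Figures 3 and 4.

```python
from collections import defaultdict

# Hypothetical nodes. The type mirrors the colour coding of the figures:
# objects are drawn in blue, events in green. All names are made up.
NODE_TYPES = {
    "Company A": "object",
    "Port B": "object",
    "Trade embargo": "event",
    "Supply shortage": "event",
}

# Directed, typed edges stored as (head, relation, tail) triples.
# The relation labels are placeholders chosen for this example.
TRIPLES = [
    ("Trade embargo", "causes", "Supply shortage"),
    ("Company A", "located_in", "Port B"),
    ("Company A", "affected_by", "Trade embargo"),
]


def build_graph(triples):
    """Index triples by head node so outgoing edges can be looked up."""
    graph = defaultdict(list)
    for head, relation, tail in triples:
        graph[head].append((relation, tail))
    return graph


def relation_category(head, tail, node_types=NODE_TYPES):
    """Toy rule: categorise an edge by the types of the nodes it connects."""
    return f"{node_types[head]}-{node_types[tail]}"


graph = build_graph(TRIPLES)
for head, edges in graph.items():
    for relation, tail in edges:
        category = relation_category(head, tail)
        print(f"{head} -[{relation}]-> {tail} ({category})")
```

Storing the edges as triples keeps both the relation type and its direction, which is the information the edges/links carry in the figures.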

Graph-based techniques have been widely used to aid network connectivity analysis, with the goal of comprehending the impacts and patterns of network events and objects. Existing techniques, however, suffer from a lack of connectivity-behaviour data as well as a loss of network event or object identification. Furthermore, as the networked data grows larger and the ontology structure becomes more complex, rule-based/pattern-based approaches could be limited in their ability to extract relations, and knowledge graphs would be insufficient to visualise the nodes, edges, and relations.

Therefore, as a next step of the Smart Navigator project, I have investigated deep neural network architectures for event/object/document detection and relation extraction in structured or unstructured data (texts). The focus is on word-level, sentence-level, and document-level detection models and their links with relation extraction. Based on this exploration, document-level detection, rather than word-level or sentence-level detection, and two neural models, namely Convolutional Neural Networks (CNNs) and Graph Neural Networks (GNNs), seem to be the most suitable ways to convert the knowledge graph approach combined with rules and patterns into neural networks.

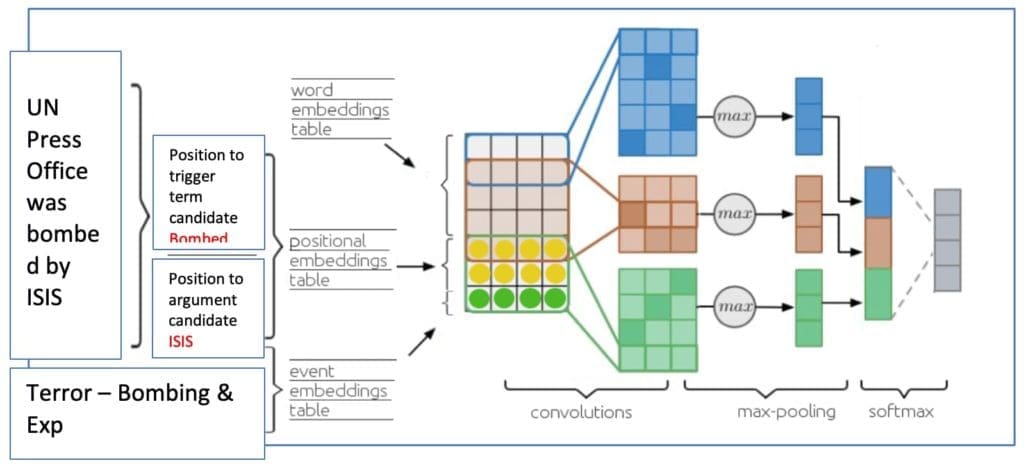

Current datasets for event/object/document detection and relation extraction do not contain all the variants of trigger keywords, whether semantic variants (synonyms) or morphological variants, yet neural network models require a lot of training data. These variations are expected to be captured by word embeddings. As a result, I will investigate various word embedding models that can serve as input to the neural-based model and, in continuation of the R&D process, will suggest a new architecture to account for morphological variants that are under-represented in the Smart Navigator databases. Figure 8 displays an example with simple steps for obtaining inputs and outputs.

Figure 8: An Example for Feeding the Neural Network for an NLP Task (Image by the Author)

Following the example in Figure 8, a sequence of n words can be thought of as a matrix in which each word (row) is represented by a d-dimensional dense vector (word embedding); the input x is therefore represented as a feature map of dimensionality n × d.
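As a small illustration of this input representation, the sketch below turns a tokenised sentence into an n × d matrix. It assumes a toy embedding table filled with random values; in practice the vectors would come from a trained word embedding model. The sentence and the function names `build_embedding_table` and `sentence_to_matrix` are hypothetical.

```python
import random


def build_embedding_table(vocabulary, d, seed=0):
    """Assign each word a random d-dimensional vector (stand-in for trained embeddings)."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(d)] for word in vocabulary}


def sentence_to_matrix(tokens, table, d):
    """Stack one embedding per token; unknown words map to a zero vector."""
    unknown = [0.0] * d
    return [list(table.get(token, unknown)) for token in tokens]


tokens = "sanctions disrupted the energy supply".split()  # n = 5 words
d = 4                                                      # embedding size
table = build_embedding_table(sorted(set(tokens)), d)
x = sentence_to_matrix(tokens, table, d)

print(len(x), "x", len(x[0]))  # number of rows (n) and columns (d)
```

Mapping unseen words to a zero vector is only one possible choice; it also shows why under-represented morphological variants are a problem, since they carry no information in such a table.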

CNNs are feed-forward neural networks whose layers are built from a convolutional layer followed by a pooling layer. This means that the first layers do not use all the input features at the same time, but rather groups of neighbouring features. Thanks to the growing interest in deep learning, CNNs have been successfully employed in numerous Natural Language Processing (NLP) applications, including relation classification and extraction. CNNs seem a suitable choice for event detection since they build global text representations, extract the most informative parts of the word sequence, and only consider the resulting activations. Turning to the relation extraction problem, a CNN predicts whether a given document contains a relation for a given pair, using a binary classification model. Given that a document contains a relation, relation classification refers to predicting which relation class from a given ontology the document points to (modelled as a multi-class classification problem). One known issue is that, after the trigger word has been predicted, the arguments can be mistakenly attached to the wrong event type. This issue can be overcome by applying a max-pooling CNN to the word embeddings of a sentence to obtain sentence-level features. The word embeddings of the candidate words (candidate trigger, candidate argument) and of the context tokens (the tokens to the left and right of the candidate words) can be selected and concatenated, and the result can be injected into the main CNN before prediction. Figure 9 shows a CNN model that can be applied to NLP tasks.
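The convolution, max-pooling, and binary classification steps described above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the model planned for the Smart Navigator System: the input values, the two filters, and the classifier weights are made up, and a real model would learn them from training data.

```python
import math


def conv1d(x, filt, bias=0.0):
    """Slide a (window x d) filter over an n x d matrix and apply ReLU."""
    window = len(filt)
    feature_map = []
    for start in range(len(x) - window + 1):
        total = bias
        for i in range(window):
            for j, weight in enumerate(filt[i]):
                total += weight * x[start + i][j]
        feature_map.append(max(0.0, total))
    return feature_map


def sentence_features(x, filters):
    """Max-over-time pooling: keep the strongest activation of each filter."""
    return [max(conv1d(x, filt)) for filt in filters]


def candidate_features(x, index, d):
    """Concatenate the embeddings of a candidate word and its left/right neighbours."""
    pad = [0.0] * d
    left = x[index - 1] if index > 0 else pad
    right = x[index + 1] if index + 1 < len(x) else pad
    return left + x[index] + right


def relation_probability(features, weights, bias=0.0):
    """Binary classifier: logistic regression on top of the pooled features."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


# Toy input: n = 4 words, d = 2 embedding dimensions (values are made up).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# Two hypothetical filters, each spanning a window of 2 words.
filters = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[-1.0, 0.0], [0.0, -1.0]],
]

features = sentence_features(x, filters)
context = candidate_features(x, index=0, d=2)
p = relation_probability(features, weights=[0.5, -0.5])
print(features, context, round(p, 3))
```

Because each filter only looks at a window of neighbouring words, the pooled vector has a fixed length regardless of sentence length, which is what allows it to be concatenated with the candidate and context embeddings before prediction.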

Figure 9: A Conceptualised CNN Model for NLP (Image by the Author)

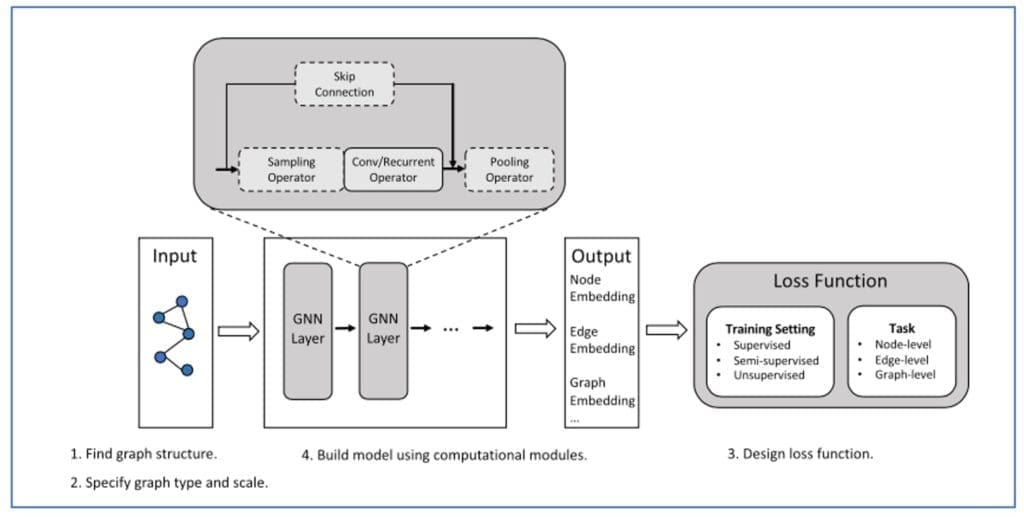

Turning to Graph Neural Networks (GNNs), they have become increasingly prominent in network analytics and beyond in recent years. Their design differs noticeably from that of standard multi-layered neural networks, and in their most modern interpretation they are employed especially for networked data. For instance, text classification, recommendation systems, traffic prediction, computer vision, and other fields use supervised classification and unsupervised embedding of graph nodes. Further, in the Smart Navigator project, the knowledge graph method is currently used to display network analysis; it relies on a directed graph, in which every edge points from one node to another and which therefore provides more information than an undirected graph. Since the graph neural structure can readily be applied to molecules, physical systems, and knowledge graphs, this also supports the idea of using GNNs in our project.

Additionally, building such neural network models requires computational modules such as propagation modules (which spread information between nodes so that the aggregated information captures both feature and topological information), sampling modules (usually needed when graphs are large), and pooling modules (needed when representations of high-level subgraphs are required). A typical GNN model is built by combining these computational modules, which is likely to enable the GNN to obtain better representations of nodes, edges, and relations and thus to extract relation information with high performance. Besides, when designing the loss function, all training settings can be used, namely supervised, semi-supervised, and unsupervised; and for graph learning tasks, all task levels (node, edge, and graph level) are feasible. Considering the Smart Navigator data sources, data types, training sets, and network analysis task levels, GNNs appear to be a feasible option for the neural network transition. Figure 10 exemplifies a typical GNN model.

Figure 10: The General Design Pipeline for a GNN Model
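The three computational modules mentioned above (propagation, sampling, and pooling) can be illustrated with a deliberately simple sketch. It assumes mean aggregation without learned weights, which is only one of many possible propagation rules; the graph, the feature values, and the function names are hypothetical.

```python
import random


def sample_neighbours(neighbours, k, rng):
    """Sampling module: keep at most k randomly chosen neighbours."""
    if len(neighbours) <= k:
        return list(neighbours)
    return rng.sample(neighbours, k)


def propagate(features, adjacency, k=None, rng=None):
    """Propagation module: average each node's vector with its neighbours' vectors."""
    updated = {}
    for node, vector in features.items():
        neighbours = adjacency.get(node, [])
        if k is not None:
            neighbours = sample_neighbours(neighbours, k, rng)
        vectors = [vector] + [features[m] for m in neighbours]
        updated[node] = [sum(column) / len(vectors) for column in zip(*vectors)]
    return updated


def mean_pool(features, nodes):
    """Pooling module: summarise a (sub)graph as the mean of its node vectors."""
    vectors = [features[n] for n in nodes]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


# Toy directed graph: adjacency[n] lists the nodes whose information flows into n.
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
adjacency = {"a": ["b", "c"], "b": ["a"], "c": []}

h = propagate(features, adjacency)                        # full neighbourhood
h_sampled = propagate(features, adjacency, k=1, rng=random.Random(0))
graph_vector = mean_pool(h, ["a", "b", "c"])              # graph-level representation
print(h, graph_vector)
```

After one propagation step each node vector reflects both its own features and its local topology; stacking several steps and adding learned weights would move this sketch closer to an actual GNN layer.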

Finally, at this stage of the Smart Navigator project, both structured and unstructured data (texts) are used; it is therefore important to understand the potential performance of GNNs in Natural Language Processing tasks, particularly relation extraction. Compared to traditional neural-based models, GNNs take a more sophisticated approach that utilises the dependency structure of the sentence. With GNNs, it is possible to build a document graph in which nodes represent words and edges represent various relations such as adjacency, syntactic dependencies, discourse relations, and the like. However, large-scale network group events or objects are often composed of several interconnected and complicated events and objects, as in the Smart Navigator system. The occurrence of such events and the existence of such objects might hide the associated connections; accordingly, it is hard to discover differences or similarities between sub-graphs or hubs among many networked nodes, and to identify patterns in a very large network. To overcome these issues, GNNs provide specific capabilities such as subgraph selection, similarity analysis, and frequent pattern mining for flow items. Furthermore, very recent studies demonstrate that GNNs can conduct reasoning very effectively on a fully connected graph with parameters generated from natural language. As document-level detection and relation extraction are planned for the Smart Navigator system, GNNs appear to have great potential for better solutions, especially in relation extraction based on the document graph structure and a relations or connectivity approach.
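A document graph of the kind described above could be assembled roughly as follows. This is a sketch under stated assumptions: tokens are obtained by whitespace splitting, adjacency edges are derived automatically, and the dependency and discourse edges are written by hand here, whereas a real pipeline would obtain them from a parser. The function name `build_document_graph` and the example sentences are hypothetical.

```python
def build_document_graph(sentences, extra_edges=()):
    """Build a word-level document graph.

    Nodes are (sentence_index, token_index, word) tuples. Edges are
    (source_id, target_id, relation) tuples, where the ids are positions
    in the node list.
    """
    nodes, edges, ids = [], [], {}
    for s_idx, sentence in enumerate(sentences):
        for t_idx, word in enumerate(sentence.split()):
            ids[(s_idx, t_idx)] = len(nodes)
            nodes.append((s_idx, t_idx, word))
            if t_idx > 0:
                # Adjacency edge between consecutive words of a sentence.
                edges.append((ids[(s_idx, t_idx - 1)], ids[(s_idx, t_idx)], "adjacent"))
    for head, dependent, relation in extra_edges:
        # Hand-written syntactic or discourse edges between token positions.
        edges.append((ids[head], ids[dependent], relation))
    return nodes, edges


sentences = ["Sanctions hit exports", "Prices rose"]
extra_edges = [
    ((0, 1), (0, 0), "nsubj"),      # hit -> Sanctions
    ((0, 1), (0, 2), "obj"),        # hit -> exports
    ((0, 1), (1, 1), "discourse"),  # hit -> rose, linking the two sentences
]

nodes, edges = build_document_graph(sentences, extra_edges)
for source, target, relation in edges:
    print(nodes[source][2], f"-[{relation}]->", nodes[target][2])
```

Keeping the relation label on every edge lets a downstream GNN treat adjacency, syntactic, and discourse links differently, and the cross-sentence edge is what makes the graph document-level rather than sentence-level.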

- Conclusion:

Even though new methods are emerging, detecting events, objects, or documents and extracting relations from structured and unstructured data remain a challenge. Different modalities, including text, voice, photos, and videos, can be used to depict an event in the real world. Using multi-modal data together could help the event/object/document and relation extraction system to disambiguate and complement information mutually. However, little research has been done on this subject, which involves representing multi-modal information in a unified semantic space and computing the alignments between modalities. In this respect, this study would help fill the gap in the field and could attract more attention in the future. Yet, a comprehensive study is needed to compare CNNs and GNNs in terms of performance in relation classification and relation extraction. Then, based on the results, one of these two neural networks, or a hybrid of both, will be used for the transition from the rule-based/pattern-based approach to the neural-based approach.

* CEO at Beyond the Horizon ISSG and Hybrid Core, PhD Researcher at the University of Antwerp and Non-Resident Fellow at New Jersey Institute of Technology