1. BACKGROUND

a. Recent EU Norms and Regulations

For years, the Commission has promoted AI cooperation across the EU to boost productivity and build trust grounded in EU values. Following the publication of the European Strategy on AI in 2018 and after extensive stakeholder consultation, the High-Level Expert Group on Artificial Intelligence (HLEG) developed the Ethics Guidelines for Trustworthy AI in 2019 and the Assessment List for Trustworthy AI in 2020. In parallel, the first Coordinated Plan on AI was published in December 2018 as a joint commitment with the Member States. The Commission’s White Paper on AI, published in 2020, set out a clear vision for AI in Europe: paving the way for an ecosystem of excellence and trust and setting the scene for the subsequent proposal for an AI regulation. The public consultation on the White Paper elicited widespread participation from across the world. The White Paper was accompanied by a ‘Report on the safety and liability implications of Artificial Intelligence, the Internet of Things and robotics’, which concluded that current product safety legislation contains several gaps that need to be addressed, notably in the Machinery Directive.

More recently, in April 2021, the Commission published its “Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonized Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts”.

b. Key Takeaways from the EU Norms and Regulations

· The main building blocks of the European AI approach are:

- The policy framework lays out steps to bring European, national, and regional initiatives closer together. The framework’s goal is to mobilize capital to build an “ecosystem of excellence” along the entire value chain, starting as early as research and development. The framework also aims to create the right incentives to promote the adoption of AI-based solutions, including by small and medium enterprises.

- The key elements of a future European regulatory framework for AI are intended to create a unique “ecosystem of trust.” The Commission strongly supports a human-centric approach and takes into account the Ethics Guidelines prepared by the High-Level Expert Group on AI.

- The European data strategy aims to help Europe become the world’s most appealing, stable, and dynamic data-agile economy, enabling Europe to use data to improve decisions and improve the lives of all its people.

- The Communications outline the terms “human-centric” and “human oversight”. However, they place little emphasis on the “human-in-the-loop” approach or on the emerging approach of hybrid intelligence.

- Regarding AI-powered decision-making, the EU seeks to build on its robust legal framework (covering data protection, fundamental rights, safety, and cybersecurity) and on its internal market, with competitive companies of all sizes and a varied industrial base. What these Communications miss, however, is that making better data-driven decisions in business and the public sector calls for cross-functional human-machine teaming and advanced human-machine interaction.

2. ANALYSIS

Europe has built a strong computing infrastructure (e.g., high-performance computers) that is critical to AI’s success. Europe also holds sizable public and industrial data that is not being used to its full potential. It has well-established industrial strengths in producing safe and stable digital systems with low power consumption, which are critical for the advancement of AI. Leveraging the EU’s capacity to invest in next-generation technologies and infrastructures, as well as in digital competencies such as data-driven transformation and the data-agile economy, will increase Europe’s technological sovereignty in key enabling technologies and infrastructures for the data economy. However, competitors such as China and the United States are innovating rapidly and projecting their ideas on data access and usage around the world. Strengthening the EU’s role as a global actor therefore requires, in addition to efforts in other domains, a unified and comprehensive approach to disruptive technologies. In this context, fragmentation among the Member States is a significant risk to the vision of a common European information space and to the development of next-generation technologies.

Turning to the critical concerns raised by AI, as identified by the High-Level Expert Group on Artificial Intelligence set up by the European Commission, the most important ones are worth listing in order to understand the scope of the threat:

– Prohibited AI practices, for instance systems that manipulate persons through subliminal techniques beyond their consciousness, exploit the vulnerabilities of specific groups, or distort free choice, as well as biometric identification systems or predictive policing that is overwhelming and oppressive,

– High-risk AI systems, such as an AI system intended to be used as a safety component of products that are subject to third-party ex-ante conformity assessment,

– Covert AI systems that do not ensure that humans are made aware of (or are able to request and validate) the fact that they are interacting with an AI system,

– Security vulnerabilities of algorithmic decision systems, arising from their high complexity, that malign actors can exploit,

– Lethal autonomous weapon systems with cognitive skills to decide whom, when, and where to fight without human intervention.

To address these challenges of AI, hybrid intelligence could be a trustworthy and sustainable option. Hybrid intelligence refers to a close combination of human and artificial intelligence. Machine learning and artificial intelligence can make statistical inferences based on patterns found in previous cases and keep learning as more data become available. Furthermore, such procedures allow the detection of complex trends in a model configuration as well as of the interrelationships between its individual components, extending methods such as simulations and scenarios. Still, they are unable to predict soft and subjective assessments of cases, such as the innovativeness of a value proposition, the vision or fit of a team, or the overall soundness of a business model, which makes such data very hard for computers to annotate.

Humans can therefore serve as the gold standard for evaluating data that are difficult to annotate and to use for training machine learning models, such as assessments of creativity and innovation. Humans excel at making subjective judgments about data that are difficult to quantify objectively using statistical methods. Furthermore, human experts have well-organized domain expertise, which allows them to identify and interpret scarce data. Humans do have cognitive limitations, but these can be mitigated through the hybrid intelligence process, which combines the opinions of a wider community of people to reduce the noise and bias in individual assessments. Thus, by accessing more diverse domain information, incorporating it into an algorithm, and reducing the risk of biased interpretation, hybrid intelligence offers an effective way to supplement machine learning systems.
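The idea of combining many human assessments to reduce individual noise can be illustrated with a minimal sketch. It assumes (hypothetically) that each expert gives a numeric score on a 1 to 10 scale and that individual errors are independent; the function names and the simulated rater model are illustrative choices, not the author’s method. Robust aggregates such as the median or a trimmed mean limit the influence of a single outlying judgment, and the small simulation shows the error of the pooled estimate shrinking as more raters are added.

```python
import random
from statistics import mean, median


def trimmed_mean(scores: list[float], trim: int = 1) -> float:
    """Average after dropping the `trim` highest and `trim` lowest scores."""
    if len(scores) <= 2 * trim:
        raise ValueError("not enough scores to trim")
    kept = sorted(scores)[trim:len(scores) - trim]
    return mean(kept)


def aggregate(scores: list[float]) -> dict[str, float]:
    """Pool individual expert scores into several candidate consensus values."""
    return {
        "mean": mean(scores),
        "median": median(scores),
        "trimmed_mean": trimmed_mean(scores),
    }


def simulate_error(n_raters: int, true_value: float = 6.0, noise_sd: float = 1.5,
                   trials: int = 2000, seed: int = 0) -> float:
    """Mean absolute error of the median of `n_raters` noisy ratings.

    Assumes each rater reports the true value plus independent Gaussian noise;
    a bias shared by all raters would NOT be removed by this aggregation.
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        scores = [rng.gauss(true_value, noise_sd) for _ in range(n_raters)]
        errors.append(abs(median(scores) - true_value))
    return mean(errors)


# Hypothetical innovativeness ratings from five experts; the last one is an outlier.
ratings = [7.0, 6.5, 7.5, 7.0, 1.0]
pooled = aggregate(ratings)
print(pooled)
print("single rater error:", simulate_error(1))
print("nine raters error:", simulate_error(9))
```

The median and trimmed mean stay close to the majority view while the plain mean is pulled down by the outlier. Which aggregate fits best would depend on how the expert community is recruited and weighted.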

In other words, by integrating the complementary capabilities of humans and machines so that they jointly produce superior results and continually evolve by learning from each other, hybrid intelligence-powered decision support is highly likely to improve the outcomes of an individual’s decision-making. Hybrid intelligence also takes advantage of human wisdom while minimizing the disadvantages of machines, such as bias and random errors. This supports the idea that a hybrid system can perform as well as fully automated systems. Each phase that involves human interaction requires the system to be structured so that humans can understand it and take the next action, and to leave humans some agency in deciding the critical steps. Because humans and AI complete the task together, the operation also becomes more explainable. Such systems are valuable not only in terms of productivity and correctness but also in terms of human choice and agency. Continuous human interaction through the proper interfaces speeds up the labeling of complex or novel data that a computer cannot handle, lowering the risk of data-related errors and automation bias.
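One common way to keep a human in the loop for cases a machine cannot handle reliably is confidence-based deferral: the system accepts high-confidence model outputs and routes the rest to a human reviewer, whose decision is final and is also stored for later retraining. The sketch below assumes a model that reports a confidence in [0, 1] and a single fixed threshold; the class and field names are hypothetical and only illustrate the pattern, not a specific system described in this paper.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's confidence in `label`, assumed to lie in [0, 1]


@dataclass
class HybridRouter:
    """Hypothetical human-in-the-loop router based on a confidence threshold."""
    threshold: float = 0.8
    accepted: dict[str, str] = field(default_factory=dict)
    review_queue: list[Prediction] = field(default_factory=list)
    training_buffer: list[tuple[str, str]] = field(default_factory=list)

    def route(self, pred: Prediction) -> None:
        # Confident outputs are accepted; uncertain or novel cases go to a human.
        if pred.confidence >= self.threshold:
            self.accepted[pred.item_id] = pred.label
        else:
            self.review_queue.append(pred)

    def record_human_label(self, item_id: str, label: str) -> None:
        # The human decision is final and is kept as new training data.
        self.accepted[item_id] = label
        self.training_buffer.append((item_id, label))
        self.review_queue = [p for p in self.review_queue if p.item_id != item_id]


# Usage with hypothetical cases.
router = HybridRouter(threshold=0.8)
for p in [Prediction("case-1", "low_risk", 0.95),
          Prediction("case-2", "high_risk", 0.55),
          Prediction("case-3", "low_risk", 0.81)]:
    router.route(p)

print([p.item_id for p in router.review_queue])  # cases awaiting a human decision
router.record_human_label("case-2", "medium_risk")
print(router.accepted)
```

In practice the threshold would need calibration against the cost of errors in the specific domain, and novelty detection could be added as a second routing criterion.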

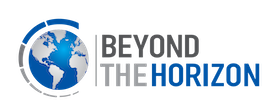

Hybrid intelligence-powered systems can help ensure that data and models are correct, relevant, transparent, explainable, and cost-effective, particularly in the case of complex problems. Especially for life-changing tasks, such as deciding whether to grant a visa or diagnosing a condition and choosing a treatment, hybrid intelligence-powered decision systems can provide greater accountability and transparency than fully automated systems. All in all, hybrid intelligence-powered decision systems can outperform solutions based solely on AI or solely on humans. However, developing such a decision system with high levels of privacy and accuracy requires a highly talented cross-functional human-machine team and innovative approaches. Against this backdrop, to devise such a decision support system configuration, Figure 1 maps the Dimensions of Decision Support (DDS) and the Design Principles (DPs). In this configuration, the DDS are defined as follows:

Informative Support: Decision support that neither suggests nor implies how to act.

Suggestive Support: Decision support with suggestions on how to act.

Dynamic Support: Decision support that learns from users and provides on-demand guidance.

Participative Support: Decision support based on users’ input (particularly for highly complex tasks).

Learning Support: Guidance that enables users to actively decide which information is needed and/or desired.

Knowledge Building: Generates new cognitive artifacts.

Visualization: Mental picture of knowledge.

Accordingly, DPs are proposed as follows:

DP 1: Provide the Hybrid Intelligence-Powered Decision Support System (HP DSS) with an ontology-based representation to transfer subject matter experts’ (SMEs) assumptions and inputs and create a shared understanding among machines and humans.

DP 2: Provide HP DSS with expertise matching through a recommendation system (such as a simple tagging system to match certain ontology models with specific domain experts to ensure high human guidance quality).

DP 3: Provide HP DSS with qualitative and quantitative feedback mechanisms to enable humans to offer adequate feedback.

DP 4: Provide HP DSS with a classifier (e.g., a Classification and Regression Tree) in order to predict the outcomes of model design options based on human calculation.

DP 5: Provide HP DSS with machine feedback capability in order to predict the outcomes of model design options based on machine outputs (e.g., a feedforward artificial neural network, support vector regression, or a recurrent neural network).

DP 6: Provide HP DSS with a knowledge-building repository to allow it to learn from the process.

DP 7: Provide HP DSS with a visualization tool allowing the users to access informative and suggestive decision support.
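DP 2 mentions a simple tagging system that matches ontology models with domain experts. A minimal sketch of that idea, under the assumption that both ontology models and experts are described by free-text tags, is to rank experts by the Jaccard similarity between tag sets. The tag vocabulary, expert identifiers, and function names below are hypothetical; a real HP DSS would likely use a richer recommendation method.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tags two sets have in common (0 = disjoint, 1 = identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def match_experts(model_tags: set[str], experts: dict[str, set[str]],
                  k: int = 2) -> list[tuple[str, float]]:
    """Return up to `k` experts with the highest tag overlap with an ontology model.

    Experts with no overlapping tag are left out; ties are broken by name.
    """
    scored = [(jaccard(model_tags, tags), name) for name, tags in experts.items()]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [(name, round(score, 3)) for score, name in scored[:k]]


# Hypothetical ontology model and expert profiles.
model = {"conflict", "migration", "sahel"}
experts = {
    "expert_a": {"conflict", "sahel", "elections"},
    "expert_b": {"migration", "border"},
    "expert_c": {"finance"},
}
print(match_experts(model, experts))
```

Here expert_a shares two of four distinct tags (score 0.5) and expert_b one of four (score 0.25), while expert_c is excluded. The feedback collected under DP 3 could later be used to refine such tags.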

3. A CASE STUDY: PREDICTIVE CRISIS MANAGEMENT

Government, research, and business actors are increasingly relying on algorithmic decision-making. Many computer algorithms are built on conventional data analysis methods that employ statistical techniques to discover relationships between variables and then forecast outcomes. However, as argued in the previous section, algorithmic decision-making has shortcomings, especially in highly complex and uncertain situations. Hybrid intelligence-powered decision systems therefore hold significant promise for providing better decision support in areas such as conflict, crisis, and operations management and law enforcement.

As the EU pursues its ambition to become a global actor, it is crucial to obtain high-value capacities and capabilities such as strategic foresight analysis, predictive crisis and operations management, gaming, and simulation. More importantly, every month thousands of people are killed by large-scale political violence across the world, forcing many more to flee within countries and across borders. Armed conflicts have devastating economic effects, disrupt the functioning of democratic systems, hinder countries’ efforts to escape poverty, and obstruct humanitarian aid where it is most needed. The difficulties of avoiding, minimizing, and adapting to large-scale crises and conflicts are exacerbated when they occur in unexpected places and at unexpected times. A system that provides early warning for all places at risk of conflict and assesses the likelihood of conflict onset, escalation, continuation, and resolution would therefore be highly beneficial to EU policymakers and first responders.

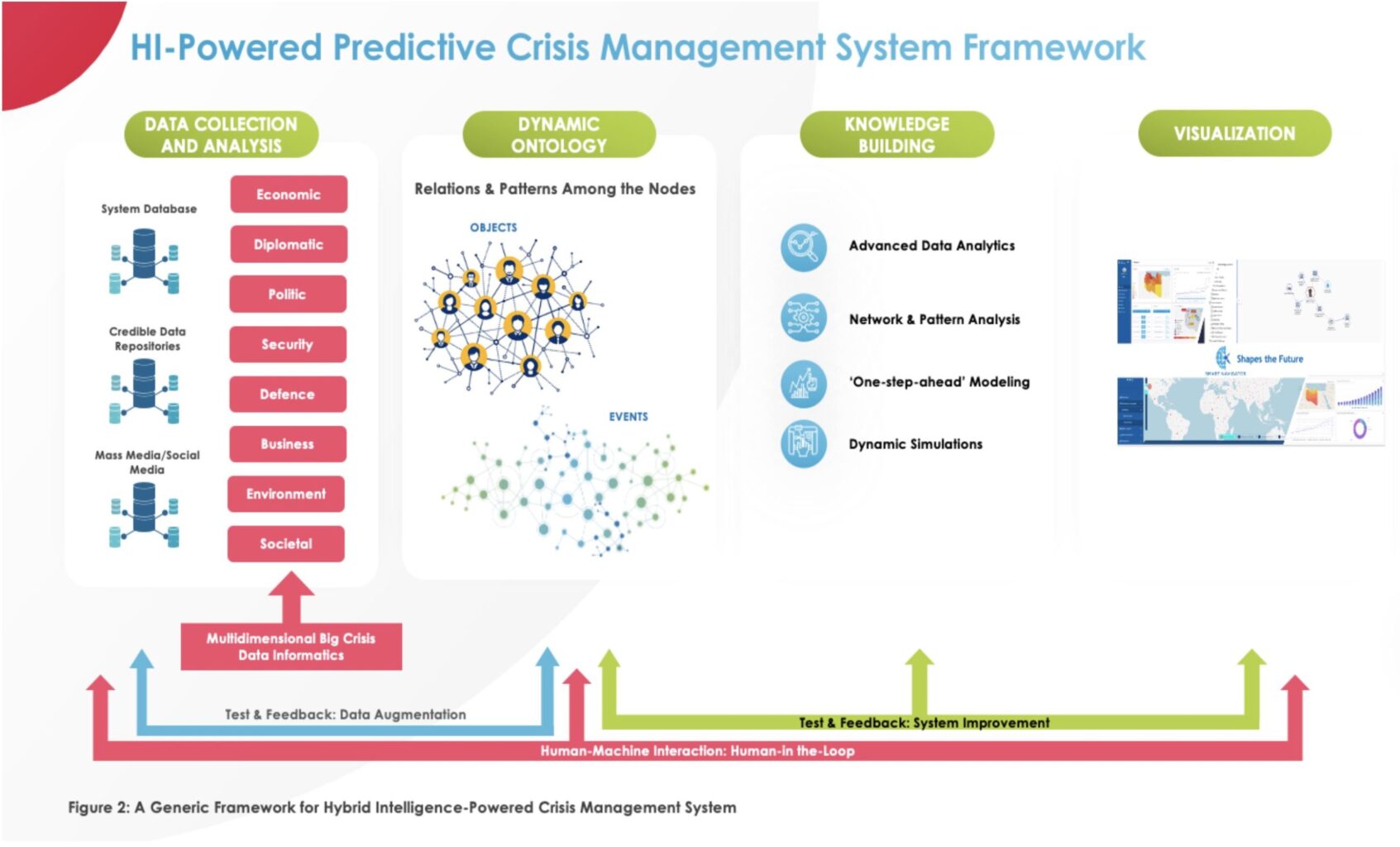

The uncertain nature of conflict and crisis is the main challenge in designing a decision-making architecture. To prepare for a crisis or conflict ahead of time, a hybrid intelligence-powered crisis management system can provide a comprehensive framework that covers the whole crisis life cycle: pre-crisis preparedness, during-crisis response, and post-crisis response. Such a system could systematically define the functional and behavioral patterns of actors and events, analyze these patterns and their relations, issue early warnings, predict actors’ future moves, and finally detect, mitigate, and prevent potential crises and conflicts, while also identifying crucial opportunities. Further, the system must ensure human-machine interaction and keep humans in the loop. Based on these arguments, the framework would have four main pillars:

– Employing a comprehensive data collection and analysis model,

– Building a dynamic ontology structure and constructing networks by finding relations among the nodes of the ontology,

– Building a knowledge extraction pipeline: using a unified detection approach that combines the proposed network topology and pattern recognition approaches, and developing simulation models such as one-step-ahead modeling or dynamic simulations,

– Developing visualization systems.
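The second pillar, constructing networks by finding relations among the nodes of an ontology, can be illustrated with a minimal sketch. It assumes (hypothetically) that events have already been extracted as (actor, relation, actor) triples; new triples can be added at any time, which loosely mirrors a dynamic ontology. Degree centrality is used here only as one simple, well-known way to surface the most connected actors; it is a stand-in, not the unified detection approach proposed above.

```python
from collections import defaultdict


def build_network(triples: list[tuple[str, str, str]]) -> dict[str, dict[str, list[str]]]:
    """Build an undirected, relation-labelled graph from (source, relation, target) triples."""
    graph: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for src, rel, dst in triples:
        graph[src][dst].append(rel)
        graph[dst][src].append(rel)  # undirected view, used for centrality
    return graph


def degree_centrality(graph: dict[str, dict[str, list[str]]]) -> dict[str, float]:
    """Fraction of the other nodes each node is directly connected to."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(neighbours) / (n - 1) for node, neighbours in graph.items()}


# Hypothetical extracted events.
events = [
    ("group_x", "attacks", "town_a"),
    ("group_x", "allies_with", "group_y"),
    ("group_y", "attacks", "town_b"),
    ("gov_forces", "attacks", "group_x"),
    ("group_x", "recruits_in", "town_b"),
]
network = build_network(events)
centrality = degree_centrality(network)
print(sorted(centrality.items(), key=lambda kv: -kv[1]))
print(dict(network["group_x"]))  # relations linking group_x to its neighbours
```

In this toy network group_x is linked to every other node, which an analyst might treat as a prompt for closer human review rather than as a conclusion in itself.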

Figure 2 displays a generic framework for a hybrid intelligence-powered crisis management system. Most crisis analytics research has been retrospective, consisting of descriptive and diagnostic analytics. Forward-looking analytics, such as predictive and prescriptive analytics, have received relatively little attention. Therefore, in line with this framework, the knowledge pipeline connecting the input layer to the output layer would consist of four fundamental steps: descriptive analysis (what happened?), diagnostic analysis (why did it happen?), predictive analysis (what will happen?), and finally prescriptive analysis (what should we do?).
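The four pipeline steps can be sketched on a toy time series of weekly conflict events. All inputs are hypothetical, and each step uses a deliberately simple stand-in technique: summary statistics (descriptive), a Pearson correlation with one candidate driver (diagnostic, which only hints at an explanation and does not establish causation), simple exponential smoothing as a one-step-ahead forecast (predictive), and a threshold rule that flags cases for analyst review (prescriptive). Keeping the last step as a recommendation to a human reflects the human-in-the-loop requirement rather than any method specified in the framework.

```python
from statistics import mean


def describe(counts: list[float]) -> dict[str, float]:
    """Descriptive step: what happened?"""
    return {"total": sum(counts), "mean": mean(counts), "max": max(counts)}


def diagnose(counts: list[float], driver: list[float]) -> float:
    """Diagnostic step: Pearson correlation with a candidate driver (not proof of cause)."""
    mx, my = mean(counts), mean(driver)
    cov = sum((x - mx) * (y - my) for x, y in zip(counts, driver))
    sx = sum((x - mx) ** 2 for x in counts) ** 0.5
    sy = sum((y - my) ** 2 for y in driver) ** 0.5
    return cov / (sx * sy) if sx and sy else 0.0


def predict_next(counts: list[float], alpha: float = 0.5) -> float:
    """Predictive step: simple exponential smoothing; the final level is the one-step-ahead forecast."""
    level = counts[0]
    for x in counts[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


def prescribe(forecast: float, baseline: float, factor: float = 1.5) -> str:
    """Prescriptive step: a rule that recommends, but does not take, an action."""
    if forecast > factor * baseline:
        return "flag for analyst review: elevated risk"
    return "continue routine monitoring"


# Hypothetical weekly event counts and a hypothetical candidate driver series.
weekly_events = [2, 3, 2, 4, 6, 9]
driver_index = [1, 1, 2, 3, 5, 7]

summary = describe(weekly_events)
correlation = diagnose(weekly_events, driver_index)
forecast = predict_next(weekly_events)
advice = prescribe(forecast, baseline=summary["mean"])
print(summary, round(correlation, 3), forecast, advice)
```

With these numbers the forecast (6.78125) exceeds 1.5 times the average weekly count, so the rule suggests human review. Real systems would replace each stand-in with models suited to the data sources described above.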

To conclude, a hybrid intelligence-powered crisis management system would significantly transform crisis and conflict management processes and the entire crisis informatics ecosystem. Multidimensional big crisis data informatics involves both vast amounts of data and an extensive range of data sources, which can include a variety of data types. Each of these large-scale crisis data sources offers a unique (but necessarily incomplete) view of what happened on the ground and why. The dynamic ontology hybridizes recognition models for events and objects, and its structure ensures reusability. The knowledge-building pillar includes predictive and prescriptive crisis analytics as well as simulations, which would be a core capacity for pre-emptive crisis and conflict management. Finally, visualization provides explainability and a frictionless interworking platform for various users and stakeholders, ensuring interoperability and harmonization in response and management efforts.

4. CONCLUSION AND POLICY RECOMMENDATIONS

From the EU perspective, AI is a strategic technology that can contribute to the well-being of people, businesses, and society as a whole if it is human-centric, ethical, and sustainable and respects fundamental rights and values. The European AI strategy aims to boost Europe’s AI innovation potential while promoting the growth and adoption of ethical and trustworthy AI in the EU economy. The latest EU norms and regulations place particular emphasis on trustworthiness, explainability, accuracy, and human involvement. Yet, when it comes to decision support or algorithmic decision systems, the EU’s AI perspective should be reviewed through a hybrid intelligence lens. Although terms such as “human-centric” and “human oversight” have already been highlighted in recent EU communications, Europe still needs a deeper understanding of, and a comprehensive approach to, hybrid intelligence-powered systems. In short, the EU must avoid operating an algorithmic black box inside what many in the public perceive as the operational black box of the EU.

Therefore, the EU should make increased use of hybrid intelligence in its internal processes and as an input to Commission decision-making and to reviews of existing policy. To this end, the following policy recommendations are proposed:

– Establish a legislative framework for governing a common European hybrid intelligence strategy and, accordingly, launch a Hybrid Intelligence Act,

– Facilitate key enablers for investment in advanced human-machine interaction systems and strengthen Europe’s capabilities and infrastructures for hosting, processing, and using human-in-the-loop systems and for interoperability,

– Invest in high-impact projects on a European hybrid intelligence strategy, encompassing data sharing and human-machine interaction architectures (including standards, best practices, and tools) and governance mechanisms, as well as a European federation of trustworthy hybrid intelligence and related services,

– Empower universities, labs, research centers, startups, and small and medium-sized enterprises to invest in the use of hybrid intelligence and in interoperability between humans and machines,

– Add new project calls encouraging the use of hybrid intelligence to the Horizon Europe project portfolio.

* CEO at Beyond the Horizon ISSG and Hybrid Core, PhD Researcher at the University of Antwerp and Non-Resident Fellow at New Jersey Institute of Technology